Data Quality Checks and Best Practices

Every business decision today is guided by data. Whether it is forecasting demand, optimizing inventory, designing customer experiences, or meeting compliance standards, organizations rely on data to act with confidence. Yet, the usefulness of data is determined not by the volume collected but by the quality it maintains.

Poor-quality data does more than undermine insights; it erodes trust, leads to operational inefficiencies, and exposes businesses to risks such as financial discrepancies and regulatory penalties. On the other hand, data that is accurate, complete, consistent, and timely provides the foundation for reliable analytics and smarter decisions.

This is where a structured approach to data quality (DQ) checks becomes essential. Rather than treating quality as an afterthought, leading enterprises are embedding systematic checks into their data management frameworks. By doing so, they ensure data remains trustworthy across its lifecycle: collection, storage, analysis, and reporting.

What is Data Quality?

Data quality refers to the degree to which data meets the needs of its intended use. It is not defined by a single parameter but by a combination of attributes that collectively determine its reliability and usability. These attributes typically include:

- Accuracy – How well does the data reflect real-world values? For instance, a customer’s recorded phone number must match the actual number they use.

- Completeness – Are all necessary fields populated? Missing details like product SKUs or transaction IDs can stall operations.

- Consistency – Is data uniform across systems? A customer listed under different names in CRM and billing systems creates confusion.

- Timeliness – Is the data updated at the right intervals? Outdated records make decisions less relevant because they describe conditions that may no longer hold.

- Validity – Does the data conform to required formats and business rules? For example, an email address should include “@” to be valid.
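Most of these attributes can be expressed as small, testable checks. The following is a minimal Python sketch rather than a prescribed implementation: the field names (`customer_id`, `email`, `updated_at`), the 30-day freshness window, and the function names are all assumptions chosen for illustration. Accuracy is left out because it can only be verified against a trusted real-world source.

```python
from datetime import datetime, timedelta

# Hypothetical required fields; adjust to your own schema.
REQUIRED_FIELDS = ("customer_id", "email", "updated_at")


def is_complete(record: dict) -> bool:
    """Completeness: every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def is_valid_email(value: str) -> bool:
    """Validity: an email must contain '@' (a necessary check, not full validation)."""
    return "@" in value


def is_timely(updated_at: datetime, now: datetime, max_age_days: int = 30) -> bool:
    """Timeliness: the record was updated within the allowed window (assumed 30 days)."""
    return now - updated_at <= timedelta(days=max_age_days)


def is_consistent(crm_name: str, billing_name: str) -> bool:
    """Consistency: the customer name matches across two systems, ignoring case and padding."""
    return crm_name.strip().lower() == billing_name.strip().lower()
```

In practice each function would run against every incoming record, with failures routed to whoever owns that data.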

High-quality data ensures that analytics deliver actionable intelligence instead of misleading outputs. Without it, even the most sophisticated BI platforms and AI models will produce flawed insights.

Best Practices for Data Quality Checks

Establishing effective data quality checks is not just about identifying errors, but about creating a structured approach that prevents problems from occurring in the first place. For organizations that deal with high volumes of data across multiple systems, adopting best practices ensures that data quality is not left to chance, but becomes a reliable and repeatable process.

One of the most important practices is to start by clearly defining what data quality means for your business. For some, accuracy is paramount; for others, completeness or timeliness may take precedence. By setting specific expectations—such as “customer phone numbers must include a country code” or “orders must not be logged without a payment reference”—organizations create a measurable standard against which data can be evaluated.
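Expectations like these become measurable once they are written down as named rules. Here is a minimal sketch, assuming records are plain dictionaries with hypothetical `phone` and `payment_reference` fields, and assuming that "includes a country code" means a leading `+` followed by 8 to 15 digits. Your own definition may differ.

```python
import re

# Assumed format: '+' then 8 to 15 digits (country code included), checked
# after stripping spaces and hyphens. Real phone formats vary by business.
PHONE_WITH_COUNTRY_CODE = re.compile(r"^\+[1-9]\d{7,14}$")


def phone_has_country_code(customer: dict) -> bool:
    phone = re.sub(r"[\s-]", "", customer.get("phone") or "")
    return bool(PHONE_WITH_COUNTRY_CODE.match(phone))


def order_has_payment_reference(order: dict) -> bool:
    return bool((order.get("payment_reference") or "").strip())


# Each rule pairs a readable name with the predicate that enforces it.
CUSTOMER_RULES = {"phone must include a country code": phone_has_country_code}
ORDER_RULES = {"order must have a payment reference": order_has_payment_reference}


def failed_rules(record: dict, rules: dict) -> list:
    """Return the names of the rules the record violates."""
    return [name for name, check in rules.items() if not check(record)]
```

Keeping the rule name next to its check means a failure report can quote the business expectation directly, which makes results easier for non-technical teams to act on.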

Another best practice is to automate data quality checks wherever possible. Manual reviews are not scalable when data flows in from hundreds of sources, often in real time. Automated checks, configured as rules within data platforms, can flag errors, duplicate entries, or missing values instantly. This not only reduces reliance on human intervention but also ensures that issues are caught early, before they cascade into downstream processes.
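As an illustration of what an automated check might look like, the sketch below scans a batch of records for duplicate keys and missing values. It is a simplified, in-memory example. The function name `scan_batch` and the `order_id` and `amount` fields are hypothetical, and a production platform would typically run equivalent rules inside the pipeline itself.

```python
from collections import Counter


def scan_batch(records, key_field, required_fields):
    """Flag duplicate keys and missing values across a batch of records.

    Returns a list of (record_index, issue_type, field_name) tuples.
    """
    key_counts = Counter(r.get(key_field) for r in records)
    issues = []
    for index, record in enumerate(records):
        key = record.get(key_field)
        # A key seen more than once marks every record that carries it.
        if key is not None and key_counts[key] > 1:
            issues.append((index, "duplicate", key_field))
        for field in required_fields:
            if record.get(field) in (None, ""):
                issues.append((index, "missing", field))
    return issues


batch = [
    {"order_id": "A1", "amount": 20.0},
    {"order_id": "A2", "amount": None},
    {"order_id": "A1", "amount": 35.5},
]
issues = scan_batch(batch, "order_id", ["order_id", "amount"])
```

Here the first and third records would be flagged as duplicates of each other, and the second for its missing amount.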

Consistency is also a crucial element. Data quality checks should be applied uniformly across all systems and data flows. Without this, businesses risk creating pockets of reliable data in some areas, while others remain error-prone. A consistent approach helps align different departments, so finance, sales, and operations are all working from the same standards.

Equally important is the practice of monitoring and continuously refining data quality checks. Business requirements evolve, data sources change, and compliance standards are updated. Checks that were sufficient last year may no longer be relevant today. Treating data quality as a “living” process ensures that rules remain aligned with business goals and regulatory requirements.
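One way to keep checks "living" is to track how often each rule passes and review any rule whose pass rate slips. The sketch below assumes rules are simple predicates over dictionary records. The 95% default threshold and the names `pass_rate` and `rules_needing_review` are illustrative assumptions, not a standard.

```python
def pass_rate(records, check):
    """Share of records that satisfy a check (1.0 for an empty batch)."""
    if not records:
        return 1.0
    return sum(1 for r in records if check(r)) / len(records)


def rules_needing_review(records, rules, thresholds, default_threshold=0.95):
    """Return {rule_name: pass_rate} for rules that fall below their threshold."""
    flagged = {}
    for name, check in rules.items():
        rate = pass_rate(records, check)
        if rate < thresholds.get(name, default_threshold):
            flagged[name] = rate
    return flagged
```

A falling pass rate can mean the data has degraded, but it can also mean the rule no longer matches how the business works. Either way, it is a prompt for a human to look.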

Collaboration also plays a significant role in successful data quality practices. Data owners, analysts, compliance teams, and IT should all have a voice in defining rules and validating results. This cross-functional alignment avoids situations where one department sets rigid standards that may not be practical for another, and instead ensures that rules are meaningful and widely adopted.

Finally, an often-overlooked best practice is to communicate the value of data quality to the business community at large. It is not enough for the IT team or data stewards to understand why checks are important. By making the outcomes visible—such as faster reporting, fewer billing disputes, or higher customer satisfaction—leaders can foster a culture where data quality is seen as everyone’s responsibility, not just a technical requirement.

Examples of Data Quality Checks

- Establish a structured approach
  - Go beyond identifying errors to preventing problems before they occur
  - Make data quality a reliable and repeatable process across systems
- Define what data quality means for your business
  - Decide whether accuracy, completeness, or timeliness takes priority
  - Set measurable standards, such as:
    - “Customer phone numbers must include a country code”
    - “Orders must not be logged without a payment reference”
- Automate data quality checks
  - Manual reviews are not scalable for high-volume, real-time data
  - Automated checks help by:
    - Flagging errors, duplicates, and missing values instantly
    - Reducing reliance on human intervention
    - Catching issues early before they impact downstream processes
- Maintain consistency across systems and data flows
  - Apply checks uniformly to avoid pockets of unreliable data
  - Align departments like finance, sales, and operations with shared standards
- Monitor and refine checks continuously
  - Update rules as business requirements, data sources, and compliance standards evolve
  - Treat data quality as a “living” process that adapts to changing needs
- Encourage cross-functional collaboration
  - Involve data owners, analysts, compliance teams, and IT in defining and validating rules
  - Ensure standards are practical, meaningful, and widely adopted
- Communicate the value of data quality
  - Show tangible outcomes, such as:
    - Faster reporting
    - Fewer billing disputes
    - Higher customer satisfaction
  - Build a culture where data quality is seen as everyone’s responsibility

Which Industries Need to Pay the Most Attention to Data Quality Rules and Checks?

Reliable data matters everywhere, but some sectors face higher stakes. Strict regulations, financial impact, and customer-facing operations make strong data quality rules essential.

- Banking, Financial Services, and Insurance (BFSI) – Accurate, consistent data ensures smooth transactions and regulatory compliance (e.g., Basel III, GDPR). Even small errors can cause failed audits, penalties, or reputational damage. Key checks: duplicate customer records, transaction reconciliation.

- Healthcare and Pharmaceuticals – Patient safety and research integrity depend on clean data. Duplicate IDs or incomplete records can be life-threatening. Hospitals need identity matching and record-completeness checks, while pharma firms require accurate trial data for approvals.

- Retail – Product, pricing, and customer data must be precise to avoid stockouts, mispricing, and poor customer experiences. Checks for catalog consistency, pricing accuracy, and clean customer data safeguard sales and loyalty.

- Telecommunications – Massive volumes of customer accounts and billing transactions demand strict rules. Poor quality leads to billing errors, service disruptions, and churn. Focus on duplicate detection, account reconciliation, and service mapping.

- Manufacturing and Supply Chain – Production depends on accurate supplier, parts, and inventory data. Gaps cause delays, compliance issues, and higher costs. Essential checks: supplier master data, inventory tracking, demand forecasting.

- Energy and Utilities – Meter readings, customer usage, and pricing must be reliable to prevent billing disputes and revenue loss. Apply strong checks for metering accuracy, billing integrity, and compliance reporting.

- Public Sector and Government – Clean citizen and taxation data improves transparency and service delivery. Poor quality leads to misallocated resources and lost trust. Standardized rules for records, taxation, and benefits distribution are key.
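Reconciliation checks, mentioned above for BFSI transactions and telecom accounts, follow a common pattern: total the detailed records and compare them with figures reported by another system. The sketch below is one simplified way to do that. The `reconcile` function, the account IDs, and the input shapes are hypothetical, and amounts are passed as strings so that `Decimal` can avoid floating-point rounding.

```python
from collections import defaultdict
from decimal import Decimal


def reconcile(transactions, reported_totals):
    """Compare per-account transaction sums with totals reported by another system.

    transactions: iterable of (account_id, amount_as_string)
    reported_totals: {account_id: total_as_string}
    Returns {account_id: (computed, reported)} for every mismatch, including
    accounts that appear on only one side.
    """
    computed = defaultdict(Decimal)
    for account_id, amount in transactions:
        computed[account_id] += Decimal(amount)

    mismatches = {}
    for account_id in set(computed) | set(reported_totals):
        ours = computed.get(account_id, Decimal("0"))
        theirs = Decimal(reported_totals.get(account_id, "0"))
        if ours != theirs:
            mismatches[account_id] = (ours, theirs)
    return mismatches


transactions = [("ACC-1", "100.50"), ("ACC-1", "-20.25"), ("ACC-2", "75.00")]
reported = {"ACC-1": "80.25", "ACC-2": "70.00", "ACC-3": "10.00"}
mismatches = reconcile(transactions, reported)
```

In this example `ACC-1` reconciles, `ACC-2` differs between the two sides, and `ACC-3` is reported without any matching transactions, so the last two would be returned for investigation.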

Implementing Data Quality with Infoveave

Maintaining high data quality often feels like a resource-heavy task, but Infoveave makes it simpler to put effective practices in place. Rather than relying on scattered tools or manual checks, the platform helps organizations bring accuracy, consistency, and trust directly into their data processes.

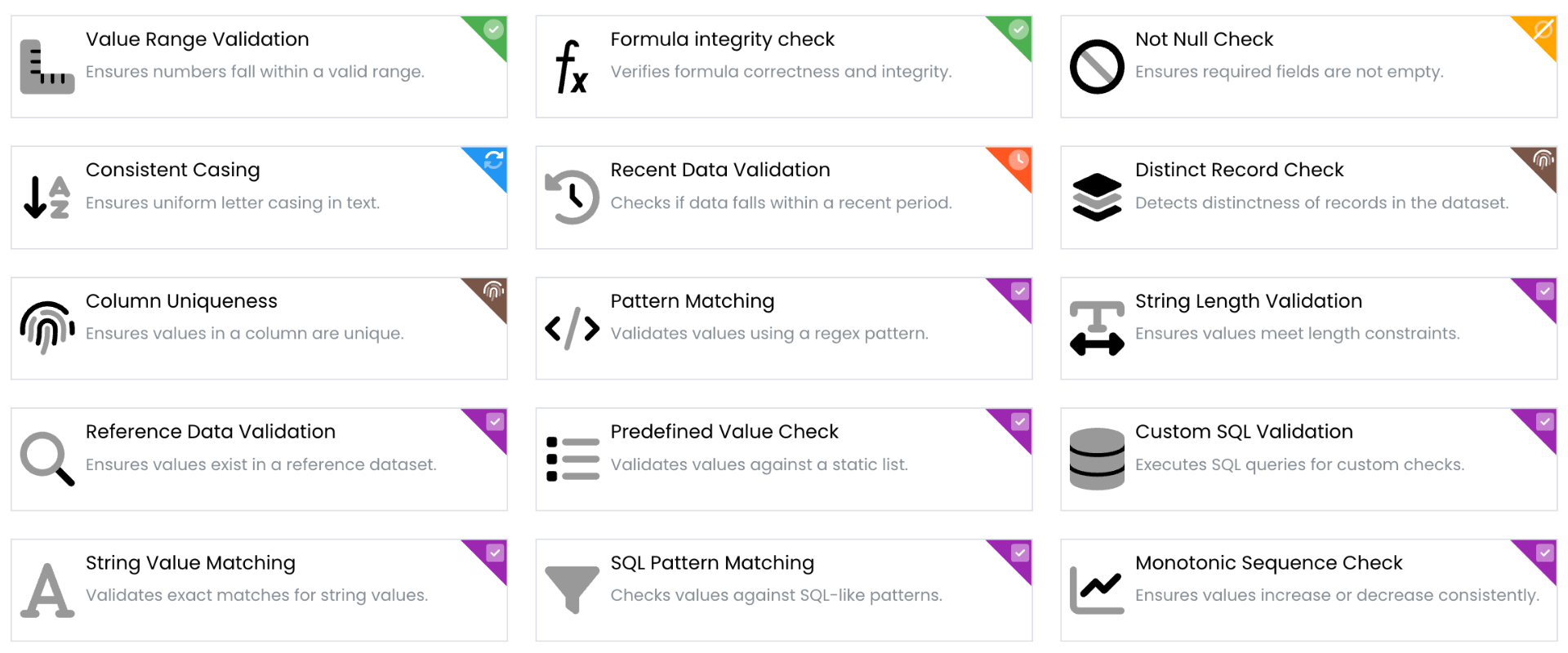

With Infoveave, teams can define rules to validate data for accuracy, completeness, and consistency across multiple systems. These checks can be automated, ensuring that errors or gaps are flagged in real time rather than discovered later during reporting. Standardization becomes easier as customer, product, financial, or operational data from different sources is harmonized into a single, reliable view.

The platform also embeds governance practices into daily operations, making it possible to align with compliance requirements without adding extra layers of complexity. This means businesses can confidently use their dashboards, reports, and analytics, knowing they are built on data that is dependable.

By treating data quality as an ongoing practice rather than a one-time project, Infoveave enables organizations to reduce the risks that come with poor data, improve efficiency in day-to-day operations, and ensure leaders have trustworthy insights for better decision-making.

Conclusion

Data quality is no longer optional—it’s the foundation that determines whether businesses can trust the insights driving their decisions. Poor-quality data introduces errors, inefficiencies, and compliance risks, while reliable data builds confidence and clarity across every department.

Implementing consistent checks and rules is an important first step, but sustaining high-quality data requires a platform that makes the process seamless. Infoveave enables organizations to manage, monitor, and improve data quality at scale, ensuring every dashboard, report, and workflow is powered by accurate and consistent information.

With trusted data in place, businesses can focus on what matters most: making informed decisions that drive growth and efficiency.