
Data Quality Tools: Why are they important and what features to look for

Data is the backbone of modern business. From forecasting sales and optimizing supply chains to personalizing customer experiences and meeting compliance requirements, every decision today relies on data. But the value of that data depends on one critical factor—its quality.

When data is accurate, complete, and consistent, it empowers leaders to act with confidence. When it isn't, businesses risk broken dashboards, compliance penalties, lost revenue, and dissatisfied customers. The challenge is that as organizations grow, so do their data ecosystems, which pull information from CRMs, ERPs, eCommerce platforms, IoT devices, and countless third-party systems. With this scale comes complexity, and with complexity comes error.

This is why data quality has become a priority for enterprises across industries. Maintaining reliable, trustworthy data is no longer optional; it's the foundation for effective decision-making, operational efficiency, and business growth. In this blog, we'll explore what data quality is, why it matters, the essential features of a data quality tool, real-world use cases, and how Infoveave's Data Quality framework helps businesses maintain trusted data for reliable insights.

What is Data Quality?

Data quality refers to the condition of data based on factors like accuracy, completeness, consistency, timeliness, and relevance. In simple terms, it answers one question: can this data be trusted to make a business decision?

For example:

- If a retail company’s product catalog lists incorrect prices across online and offline channels, it risks revenue loss and customer dissatisfaction.

- If a hospital’s patient records are incomplete or inconsistent across departments, it can affect patient safety and compliance reporting.

- If a financial institution has duplicate or outdated customer data, it risks regulatory fines and poor customer experiences.

High-quality data ensures that insights reflect business reality. On the other hand, poor data quality creates ripple effects like broken dashboards, misleading reports, operational inefficiencies, and in many cases, reputational damage.

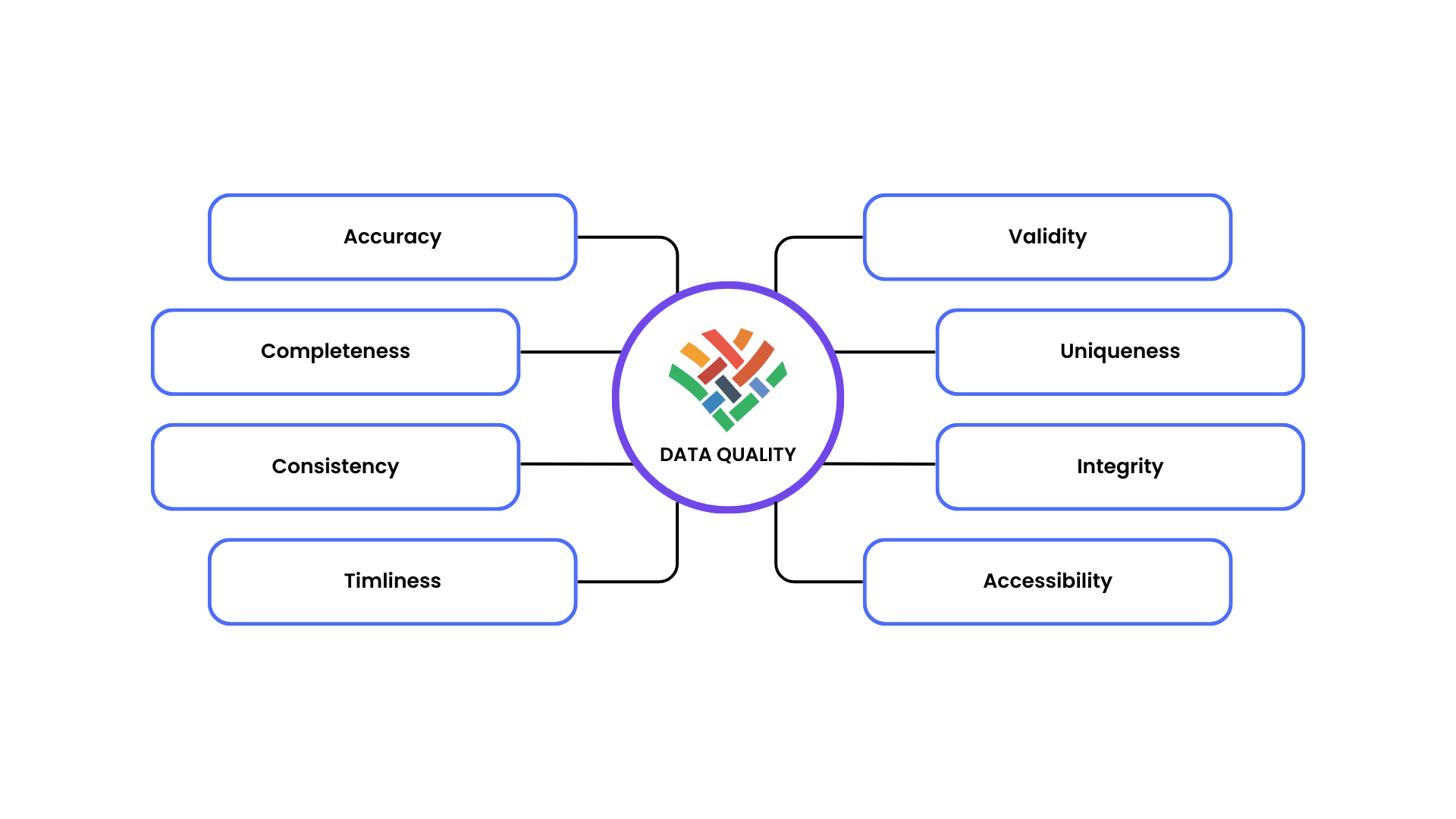

Key Dimensions of Data Quality

Accuracy

Accuracy ensures that data correctly reflects real-world information and is free of errors. For example, a customer's address on file should match where the customer actually lives. Accurate data helps businesses generate dependable insights and make better decisions.

Consistency

Consistency ensures that data is uniform across different sources. For example, a customer’s name must be the same in both the billing and CRM systems. Inconsistent data can create confusion and reduce trust.
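To make this concrete, here is a minimal Python sketch of a cross-system consistency check. The `billing` and `crm` dictionaries, the shared customer IDs, and the `find_inconsistent_names` helper are hypothetical stand-ins for real source systems; a production tool would read from the systems themselves.

```python
# Hypothetical extracts from two systems, keyed by an assumed shared customer ID.
billing = {"C001": "Jane Smith", "C002": "Ravi Kumar", "C003": "Ana Lopez"}
crm = {"C001": "Jane Smith", "C002": "Ravi  Kumar", "C003": "Ana López"}


def normalize(name: str) -> str:
    """Collapse repeated whitespace and ignore case so trivial formatting differences don't count."""
    return " ".join(name.split()).casefold()


def find_inconsistent_names(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """Return IDs present in both systems whose names still differ after normalization."""
    shared_ids = sorted(a.keys() & b.keys())
    return [cid for cid in shared_ids if normalize(a[cid]) != normalize(b[cid])]


print(find_inconsistent_names(billing, crm))  # ['C003']
```

Normalizing first is a deliberate choice: it keeps whitespace and case differences out of the report, while genuinely different spellings (here, an accented character) still surface for review.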

Relevancy

Relevancy ensures that the data is appropriate and useful for the specified purpose. Essentially, it's about having the right data for the right job. Relevant data can streamline operations, improve processes, and ultimately boost organizational performance. For example, for a company that wants to improve its marketing strategies, sales data related to customer demographics and purchase history is highly relevant.

Completeness

Completeness ensures all necessary information is present. For instance, a sales record should include details like the product, customer, and transaction amount. Missing information can make the data less useful and harder to analyze.
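A completeness check can be as simple as listing the required fields and flagging records where any of them is absent or blank. The field names below follow the sales-record example but are assumptions, as is the choice to treat an empty string the same as a missing value.

```python
REQUIRED_FIELDS = ("product", "customer", "amount")  # assumed required fields


def missing_fields(record: dict) -> list[str]:
    """List required fields that are absent, None, or blank strings (0 counts as present)."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


# Hypothetical sales records
sales = [
    {"product": "Laptop", "customer": "C001", "amount": 1200.0},
    {"product": "Mouse", "customer": "", "amount": 25.0},
    {"product": "Monitor", "amount": None},
]

for i, rec in enumerate(sales):
    gaps = missing_fields(rec)
    if gaps:
        print(f"record {i}: missing {gaps}")

complete = sum(1 for rec in sales if not missing_fields(rec))
print(f"completeness: {complete}/{len(sales)} records")
```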

Validity

Validity checks whether the data follows the required rules and formats. For example, dates should be in “YYYY-MM-DD” format, and phone numbers should have the correct number of digits. Invalid data can cause errors and slow down processes.
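Format rules like these translate directly into code. This sketch assumes a 10-digit phone rule purely for illustration (real requirements vary by country). It pairs a regular expression with `datetime.strptime`, because `strptime` alone would also accept single-digit months and days such as `2024-2-9`.

```python
import re
from datetime import datetime

DATE_PATTERN = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")
PHONE_DIGITS = 10  # assumed rule for illustration; real requirements vary by country


def is_valid_date(value: str) -> bool:
    """True only if value is exactly YYYY-MM-DD and names a real calendar date."""
    if not DATE_PATTERN.fullmatch(value):
        return False  # wrong shape, e.g. "29/02/2024" or "2024-2-9"
    try:
        datetime.strptime(value, "%Y-%m-%d")  # rejects impossible dates like 2023-02-29
    except ValueError:
        return False
    return True


def is_valid_phone(value: str) -> bool:
    """True if value holds exactly PHONE_DIGITS ASCII digits once spaces, dashes, dots, and parentheses are removed."""
    digits = re.sub(r"[\s\-().]", "", value)
    return len(digits) == PHONE_DIGITS and digits.isascii() and digits.isdigit()


for d in ["2024-02-29", "2023-02-29", "29/02/2024"]:
    print(d, is_valid_date(d))
for p in ["(555) 123-4567", "555-1234"]:
    print(p, is_valid_phone(p))
```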

Uniqueness

Uniqueness ensures that data is not duplicated. For example, a customer should only have one profile in the system. Duplicate records can be misleading and lead to incorrect analysis.
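Duplicate detection usually starts by grouping records on a normalized matching key. This sketch assumes the email address is that key and uses made-up customer records; real-world matching often combines several fields or uses fuzzy comparison, which is beyond this example.

```python
from collections import defaultdict

# Hypothetical customer records; IDs 1 and 3 are the same person entered twice.
customers = [
    {"id": 1, "name": "Jane Smith", "email": "jane@example.com"},
    {"id": 2, "name": "Ravi Kumar", "email": "ravi@example.com"},
    {"id": 3, "name": "Jane Smith", "email": "JANE@example.com "},
]


def find_duplicates(records: list[dict], key_fields: list[str]) -> dict[tuple, list]:
    """Group record IDs by a trimmed, case-insensitive key and keep only keys seen more than once."""
    groups = defaultdict(list)
    for rec in records:
        key = tuple(str(rec[f]).strip().casefold() for f in key_fields)
        groups[key].append(rec["id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


print(find_duplicates(customers, ["email"]))  # {('jane@example.com',): [1, 3]}
```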

Timeliness

Timeliness ensures that data is up to date and available in real time when the business needs it. For example, stock levels in an inventory system should reflect the current quantities. Outdated data can lead to poor decisions and missed opportunities.
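A basic freshness check compares each record's last-update timestamp against an allowed age. The 15-minute threshold and the SKU data are assumptions; `now` is passed in explicitly so the check is easy to test.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(minutes=15)  # assumed freshness threshold for inventory data


def stale_items(last_updated: dict[str, datetime], now: datetime,
                max_age: timedelta = MAX_AGE) -> list[str]:
    """Return SKUs whose stock level has not been refreshed within max_age."""
    return sorted(sku for sku, ts in last_updated.items() if now - ts > max_age)


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
# Hypothetical last-update times per SKU
inventory_updates = {
    "SKU-1": now - timedelta(minutes=5),
    "SKU-2": now - timedelta(hours=3),
}
print(stale_items(inventory_updates, now))  # ['SKU-2']
```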

Integrity

Integrity ensures that data relationships are accurate and well maintained across platforms by enforcing strict validation standards. For example, every order should have a valid customer ID that matches an entry in the customer database. Broken links in data can lead to incomplete or incorrect insights.
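Referential integrity for the orders example can be checked by looking for orders whose customer ID has no match in the customer table. The data below is hypothetical; in a relational database, a foreign-key constraint would enforce the same rule when records are written.

```python
# Hypothetical customer table (IDs only) and orders
customer_ids = {"C001", "C002"}
orders = [
    {"order_id": "O-1", "customer_id": "C001"},
    {"order_id": "O-2", "customer_id": "C999"},  # points to a customer that doesn't exist
    {"order_id": "O-3", "customer_id": None},    # no customer at all
]


def orphan_orders(orders: list[dict], customer_ids: set[str]) -> list[str]:
    """Return order IDs whose customer_id is missing or not found among known customers."""
    return [o["order_id"] for o in orders if o.get("customer_id") not in customer_ids]


print(orphan_orders(orders, customer_ids))  # ['O-2', 'O-3']
```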

Why Are Data Quality Tools Important?

Modern businesses rarely draw their data from a single system. Instead, they operate in complex ecosystems where data flows continuously from multiple touchpoints, including ERP systems, CRMs, IoT sensors, eCommerce platforms, web analytics, customer service systems, and third-party applications.

This interconnected environment brings massive opportunity, but also complexity. Without a structured framework, data errors creep in—duplicates, missing records, schema changes, and incorrect transformations. These errors often go unnoticed until they reach the business intelligence layer, where dashboards break, or worse, mislead decision-makers.

The Role of Data Quality Tools

Data quality tools exist to automate, monitor, and maintain the health of enterprise data. They ensure that the data feeding your dashboards, reports, and AI models is accurate, consistent, and usable.

Here’s why they’re indispensable:

1. Automation at Scale

Manual checks can’t keep up with growing data volumes. A data quality tool automates validations across datasets, reducing human error and freeing teams to focus on analysis.

2. Proactive Error Detection

Instead of reacting after something breaks, data quality tools detect anomalies in real time, whether it's a sudden drop in sales orders or a schema mismatch in a data pipeline.

3. Cost Savings

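A widely cited rule of thumb, the 1-10-100 rule, holds that a data error costing $1 to prevent at the point of entry costs about $10 to correct later and about $100 if it is left uncorrected. Automated tools catch errors early and prevent these costly downstream issues.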

4. Compliance Assurance

Industries like healthcare, finance, and utilities face strict compliance requirements. Data quality tools help organizations maintain audit-ready records at all times.

5. Building Trust in Insights

Leaders need confidence in the data behind every decision. A quality tool ensures the insights they see are grounded in reality—not corrupted by errors.

Without such tools, businesses risk making decisions on incomplete or inaccurate data—an expensive gamble in competitive industries.

What Features Does a Data Quality Tool Need?

The most effective data quality tools share a set of core features designed to address different aspects of scalability, reliability and governance.

1. Data Profiling & Validation

A strong tool should automatically scan datasets to highlight anomalies, missing fields, duplicates, and invalid values. This gives businesses visibility into the health of their data before it enters critical workflows.
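A first-pass profile can be computed with the standard library alone. This sketch summarizes each column's null count, number of distinct values, and most frequent value; the product rows are made up, and treating empty strings as nulls is an assumption.

```python
from collections import Counter


def profile(rows: list[dict]) -> dict[str, dict]:
    """Summarize each column: null count, distinct non-null values, and most frequent value."""
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v not in (None, "")]
        counts = Counter(non_null)
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "top": counts.most_common(1)[0] if counts else None,  # (value, count)
        }
    return report


# Hypothetical product rows
rows = [
    {"sku": "A1", "price": 9.99, "category": "toys"},
    {"sku": "A2", "price": None, "category": "toys"},
    {"sku": "A2", "price": 4.50, "category": ""},
]
for col, stats in profile(rows).items():
    print(col, stats)
```

Here the profile shows that `sku` has only two distinct values across three rows, hinting at a duplicate, while `price` and `category` each have one missing value.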

2. Data Lineage & Traceability

Data moves across multiple systems—collected in one, transformed in another, and consumed in a third. Lineage allows teams to trace how data flows and changes across pipelines. This makes it easier to identify where an error occurred and how it affects downstream systems.
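Under the hood, lineage is a directed graph of datasets. This sketch uses a hypothetical dictionary that maps each dataset to its inputs and walks it breadth-first: upstream to find where a broken dashboard's data came from, and downstream (by inverting the edges) to see what a faulty source affects.

```python
from collections import deque

# Hypothetical lineage graph: each dataset maps to the datasets it is built from.
UPSTREAM = {
    "sales_dashboard": ["sales_mart"],
    "sales_mart": ["orders_clean", "customers_clean"],
    "orders_clean": ["erp_orders_raw"],
    "customers_clean": ["crm_customers_raw"],
    "erp_orders_raw": [],
    "crm_customers_raw": [],
}


def upstream_of(node: str, graph: dict[str, list[str]] = UPSTREAM) -> set[str]:
    """Breadth-first walk returning every dataset that feeds into node, directly or indirectly."""
    seen, queue = set(), deque(graph.get(node, []))
    while queue:
        current = queue.popleft()
        if current not in seen:
            seen.add(current)
            queue.extend(graph.get(current, []))
    return seen


def downstream_of(node: str, graph: dict[str, list[str]] = UPSTREAM) -> set[str]:
    """Invert the edges, then walk them to find everything that depends on node."""
    inverted: dict[str, list[str]] = {}
    for child, parents in graph.items():
        for parent in parents:
            inverted.setdefault(parent, []).append(child)
    return upstream_of(node, inverted)


print(sorted(upstream_of("sales_dashboard")))
# ['crm_customers_raw', 'customers_clean', 'erp_orders_raw', 'orders_clean', 'sales_mart']
print(sorted(downstream_of("erp_orders_raw")))
# ['orders_clean', 'sales_dashboard', 'sales_mart']
```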

3. Automated Anomaly Detection

Business environments are dynamic. A sudden spike in website orders might indicate success, but it could also signal a system error. Automated anomaly detection helps differentiate between the two by identifying unexpected shifts in data patterns.
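One simple way to flag unexpected shifts is a z-score test against recent history. This is a swapped-in illustration rather than a description of any particular product: the 3-standard-deviation threshold and the daily order counts are assumptions, and production systems often use models that account for trends and seasonality.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag latest if it lies more than threshold sample standard deviations from the history's mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily order counts for the past week
daily_orders = [1020, 980, 1005, 995, 1010, 990, 1000]
print(is_anomalous(daily_orders, 1015))  # False
print(is_anomalous(daily_orders, 4200))  # True
```

The check only flags a value as unusual; deciding whether a spike reflects a successful campaign or a duplicated data load still needs business context.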

4. Business Rule Enforcement

Every business has unique validation needs. For example, in finance, loan amounts should not exceed set thresholds; in retail, product IDs must be unique. Rule-based checks allow companies to enforce their own business logic across datasets.
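Rule-based checks are often expressed declaratively, so new rules can be added without changing the engine that runs them. In this sketch, the 500,000 loan limit, the dataset names, and the `max_value` and `unique` rule builders are hypothetical.

```python
from collections import Counter
from typing import Callable

# A check takes a list of rows and returns identifiers of the rows (or values) that violate it.
Check = Callable[[list[dict]], list[str]]


def max_value(field: str, limit: float, id_field: str) -> Check:
    """Build a rule that flags rows whose field exceeds limit."""
    def check(rows: list[dict]) -> list[str]:
        return [str(r[id_field]) for r in rows if r[field] > limit]
    return check


def unique(field: str) -> Check:
    """Build a rule that flags values appearing more than once in field."""
    def check(rows: list[dict]) -> list[str]:
        counts = Counter(r[field] for r in rows)
        return sorted(str(v) for v, n in counts.items() if n > 1)
    return check


# Hypothetical rule set: (dataset name, rule name, check)
RULES = [
    ("loans", "loan_amount_within_limit", max_value("amount", 500_000, "loan_id")),
    ("products", "product_id_unique", unique("product_id")),
]


def run_rules(datasets: dict[str, list[dict]], rules) -> dict[str, list[str]]:
    """Apply every rule to its dataset and keep only the rules that found violations."""
    violations = {}
    for dataset, name, check in rules:
        failed = check(datasets[dataset])
        if failed:
            violations[name] = failed
    return violations


datasets = {
    "loans": [{"loan_id": "L1", "amount": 250_000}, {"loan_id": "L2", "amount": 750_000}],
    "products": [{"product_id": "P1"}, {"product_id": "P2"}, {"product_id": "P1"}],
}
print(run_rules(datasets, RULES))
# {'loan_amount_within_limit': ['L2'], 'product_id_unique': ['P1']}
```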

5. Integration & Scalability

A data quality tool must handle structured, semi-structured, and unstructured data and support hybrid environments spanning cloud and on-premises systems. Scalability is essential as data volumes continue to grow.

6. Governance & Compliance Support

With data privacy regulations becoming stricter, governance features are non-negotiable. A robust tool should include compliance-ready frameworks for GDPR, HIPAA, PCI DSS, and other industry regulations.

7. Alerts & Workflow Automation

Detecting errors is only half the job. Teams need real-time alerts and automated workflows to fix issues before they affect decision-making. Integration with communication platforms ensures problems are addressed quickly.
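The routing logic behind alerts can be sketched in a few lines. The `CheckResult` type, the severity levels, and the channel names are hypothetical; the `notify` callback stands in for whatever email, chat, or ticketing integration a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    name: str
    failed_rows: int
    severity: str  # assumed levels: "critical" or "warning"


def dispatch_alerts(results: list[CheckResult], notify: Callable[[str, str], None]) -> int:
    """Send one message per failing check; critical issues go to an on-call channel. Returns messages sent."""
    sent = 0
    for r in results:
        if r.failed_rows == 0:
            continue  # passing checks generate no noise
        channel = "on-call" if r.severity == "critical" else "data-team"
        notify(channel, f"[{r.severity.upper()}] {r.name}: {r.failed_rows} failing rows")
        sent += 1
    return sent


# A stand-in notifier that records messages instead of sending them.
outbox: list[tuple[str, str]] = []
results = [
    CheckResult("orders.customer_id_exists", 12, "critical"),
    CheckResult("products.price_not_null", 0, "warning"),
    CheckResult("customers.email_unique", 3, "warning"),
]
sent = dispatch_alerts(results, lambda channel, msg: outbox.append((channel, msg)))
for channel, msg in outbox:
    print(channel, msg)
```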

Together, these features form a comprehensive approach to maintaining trust in data across the enterprise.

Data Quality Use Cases

Data quality isn’t abstract; it has real-world business impact. Below are examples of how different industries use it to solve pressing challenges.

Retail

- Accurate pricing and promotions: Ensuring product catalogs and prices are consistent across stores, websites, and marketplaces.

- Inventory management: Detecting discrepancies between physical stock and digital records to avoid stockouts or overstocking.

- Customer experience: Eliminating duplicate customer profiles ensures seamless loyalty rewards and targeted marketing.

Banking and Financial Services

- Fraud prevention: Validating transaction data helps identify suspicious activities in real time.

- KYC compliance: Ensuring customer records are complete and consistent across systems avoids regulatory penalties.

- Audit-ready reporting: Clean data means faster, more accurate compliance reporting.

Healthcare

- Patient safety: Incomplete or incorrect patient data can lead to misdiagnosis or treatment errors. Data quality ensures accuracy across records.

- Clinical research: Reliable data from trials supports evidence-based decision-making and regulatory approvals.

- Regulatory compliance: Ensures healthcare organizations meet HIPAA and other privacy standards.

Manufacturing and Supply Chain

- Order accuracy: Preventing delays caused by missing or inconsistent order records.

- Demand forecasting: Clean data supports accurate predictions, reducing waste and improving margins.

- Supplier management: Ensuring correct data on shipments, invoices, and timelines prevents costly disruptions.

Utilities

- Billing accuracy: Validating consumption records to prevent disputes and revenue leakage.

- Service monitoring: Ensuring customer usage data is consistent across platforms.

- Regulatory reporting: Maintaining compliance through clean, auditable records.

Across industries, reliable data is directly tied to revenue, compliance, and customer trust.

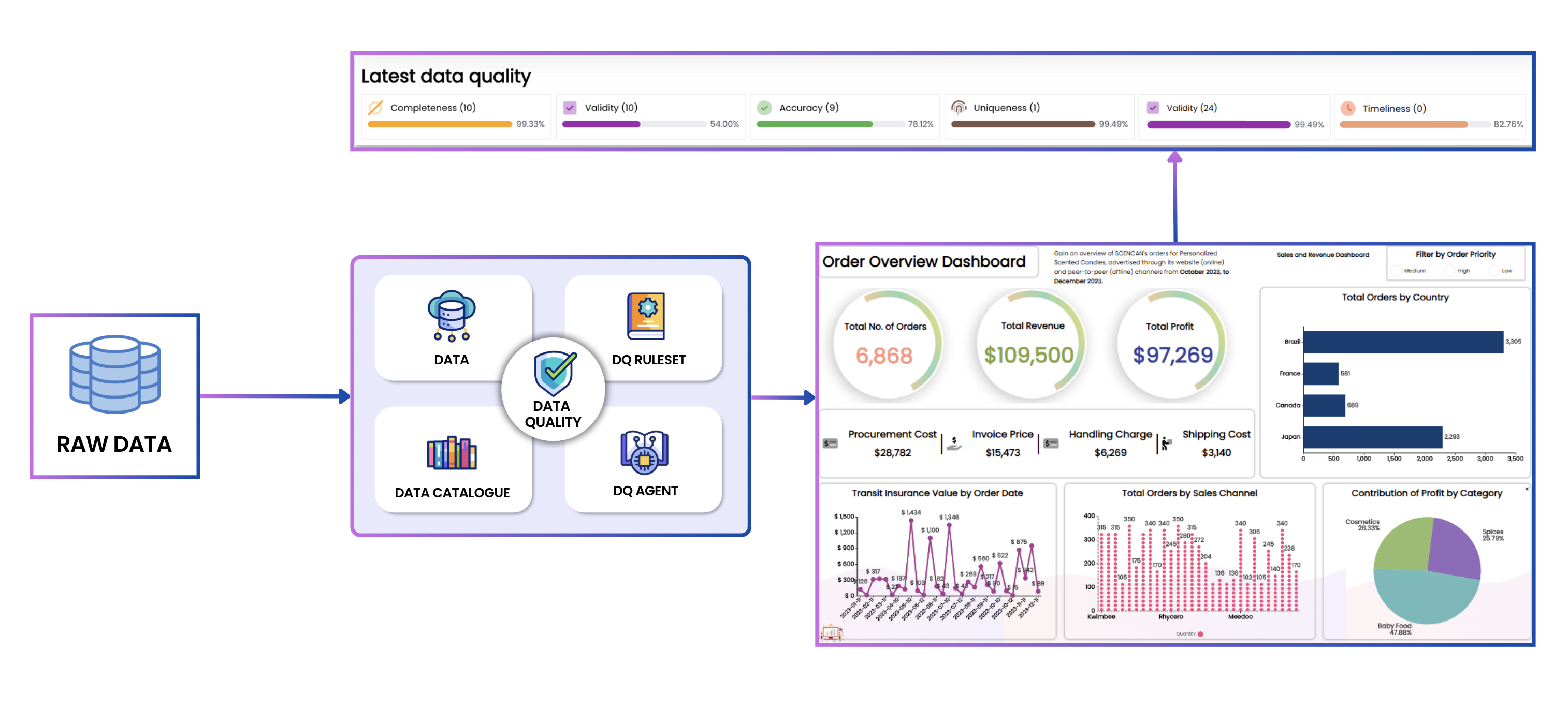

Conclusion: How Infoveave’s Data Quality Platform Can Help Your Organization Maintain Trusted Data

Ask yourself:

- Are your dashboards and reports consistently accurate?

- Do you spend more time fixing data issues than analyzing insights?

- Are compliance risks looming because of incomplete or duplicate records?

If the answer is yes, it’s time to strengthen your data quality foundation.

Infoveave’s Data Quality framework helps enterprises:

- Automate checks and anomaly detection at scale.

- Trace data lineage to quickly resolve errors at the source.

- Ensure compliance with built-in governance capabilities.

- Prevent downstream errors, ensuring reliable reports and dashboards.

- Build trust in data-driven decisions across every department.

Reliable insights start with reliable data. With Infoveave, your organization can reduce risks, optimize operations, and make confident decisions powered by trusted data.

Maintaining data quality isn’t a one-time task—it requires a systematic, scalable framework. Infoveave’s Data Quality platform is designed to address the unique challenges of modern enterprises, ensuring data remains accurate, consistent, and trustworthy at all times.